Introducing a new service partition on Sherlock

New

Scheduler

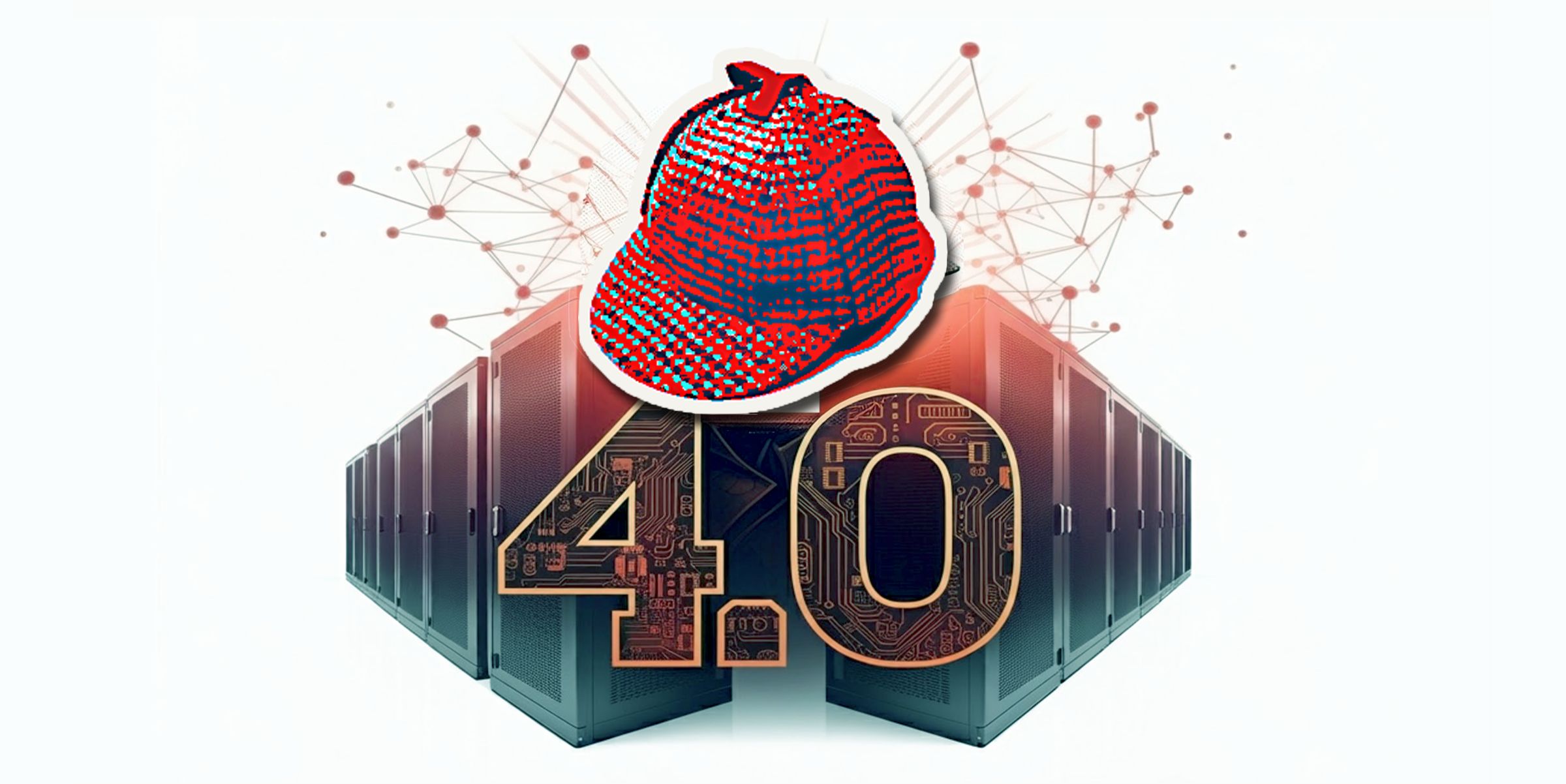

Sherlock 4.0: a new cluster generation

New

Announce

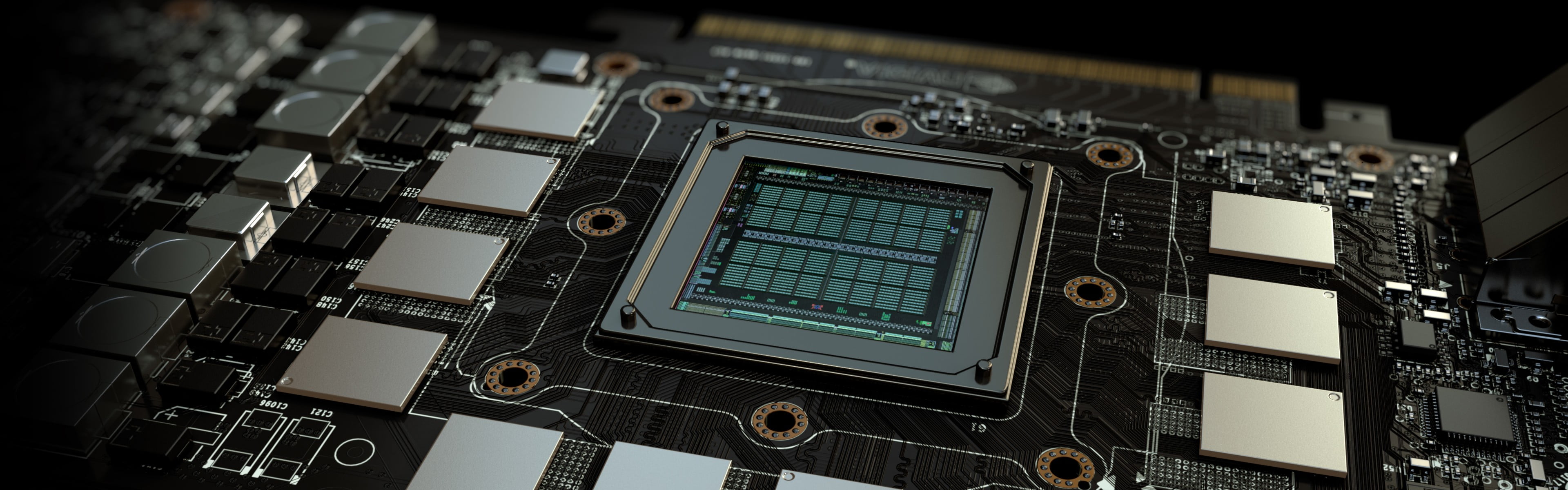

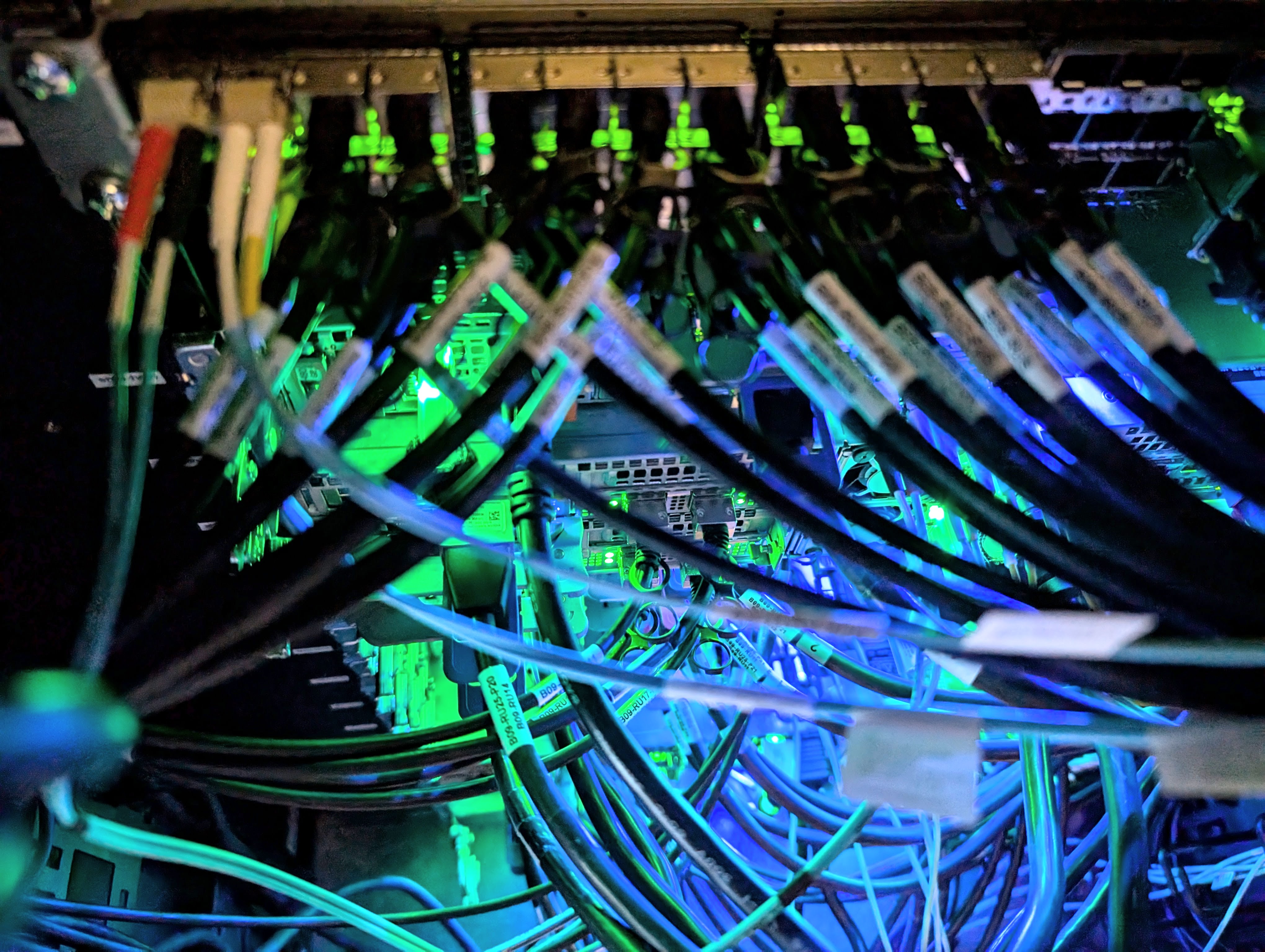

Hardware

Storage quota units change: TB to TiB

Sherlock 4.0 is coming!

New

Hardware

Sherlock goes full flash

Data

Hardware

Improvement

Final hours announced for the June 2023 SRCF downtime

Maintenance

Announce

Instant lightweight GPU instances are now available

New

Hardware