Sherlock welcomes Volta

March 30, 2018

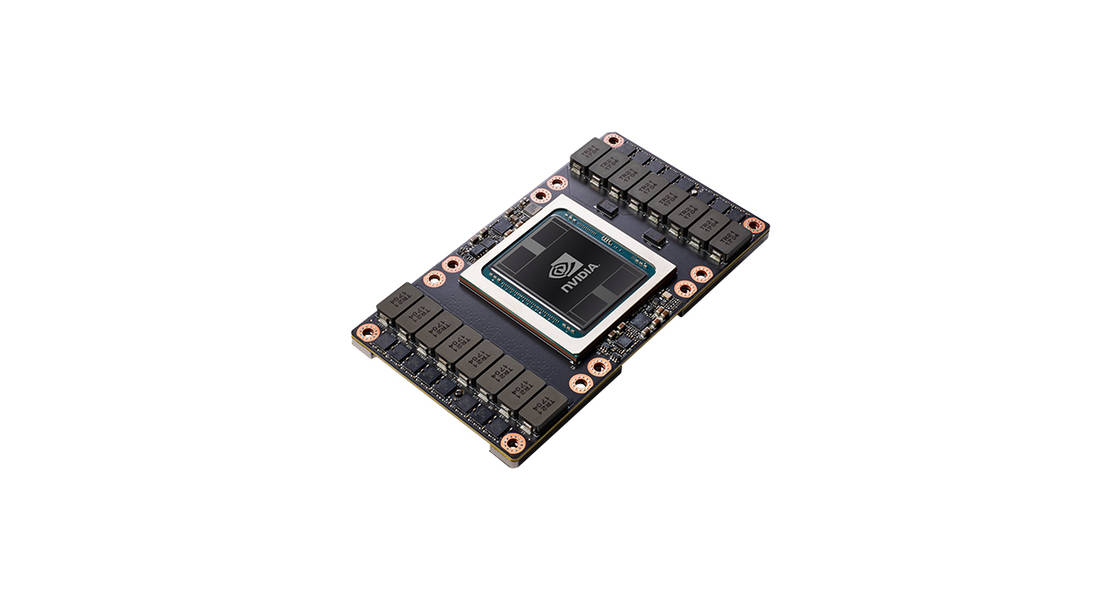

The Sherlock 2.0 cluster now features the latest generation of GPU accelerators: the NVIDIA Tesla V100.

Each V100 GPU features 7.8 TFlops of double-precision (FP64) performance, up to 125 TFlops for deep-learning applications, 16GB of HBM2 memory, and 300GB/s of interconnect bandwidth using NVLink 2.0 connections.

Three new compute nodes, each with four V100 GPUs connected in a completely non-blocking NVLink topology, have been added to the publicly available GPU partition and are available to run jobs now.

Details

Those nodes are now available to everyone in the gpu partition, and can be requested by adding the -C GPU_SKU:V100_SXM2 flag to your job submission options.
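For batch jobs, the same options can be placed in `#SBATCH` directives. The script below is a minimal sketch, not an official template: the partition, GPU count, and constraint come from this announcement, while the time limit, the job name, and the `nvidia-smi` check are assumptions added for illustration.

```shell
#!/bin/bash
# Partition, GPU count and constraint as described in this announcement.
#SBATCH -p gpu
#SBATCH --gres gpu:1
#SBATCH -C GPU_SKU:V100_SXM2
# Assumed values, adjust to your own job.
#SBATCH --time=00:10:00
#SBATCH --job-name=v100_check

# Report which GPU the job landed on, if the NVIDIA tools are installed.
if command -v nvidia-smi >/dev/null 2>&1; then
    gpu_report=$(nvidia-smi --query-gpu=name,memory.total --format=csv)
else
    gpu_report="nvidia-smi not found on $(hostname)"
fi
echo "$gpu_report"
```

Saved under a hypothetical name such as v100_check.sh, the script would be submitted with `sbatch v100_check.sh`.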

To request an interactive session on a V100 GPU, you can run:

    $ srun -p gpu --gres gpu:1 -C GPU_SKU:V100_SXM2 --pty bash

To see the list of all the available GPU features and characteristics that can be requested in the gpu partition:

    $ sh_node_feat -p gpu | grep GPU
    GPU_BRD:TESLA
    GPU_CC:6.0
    GPU_CC:6.1
    GPU_CC:7.0
    GPU_GEN:PSC
    GPU_GEN:VLT
    GPU_MEM:16GB
    GPU_MEM:24GB
    GPU_SKU:P100_PCIE
    GPU_SKU:P40
    GPU_SKU:V100_SXM2

For more details about GPUs on Sherlock, see the GPU user guide.
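Each entry in that list is a NAME:VALUE pair, so the values available for one feature type can be pulled out with standard text tools. The snippet below is a small sketch that works on a copy of the list shown above rather than on live `sh_node_feat` output; the variable names are illustrative only.

```shell
# Feature list copied from the sh_node_feat output shown above.
features="GPU_BRD:TESLA GPU_CC:6.0 GPU_CC:6.1 GPU_CC:7.0 GPU_GEN:PSC GPU_GEN:VLT GPU_MEM:16GB GPU_MEM:24GB GPU_SKU:P100_PCIE GPU_SKU:P40 GPU_SKU:V100_SXM2"

# Split the list into one feature per line, keep the GPU_SKU entries,
# and print only the value after the colon.
skus=$(printf '%s\n' $features | grep '^GPU_SKU:' | cut -d: -f2)
echo "$skus"
```

Any full NAME:VALUE pair from the list should be usable with -C in the same way as GPU_SKU:V100_SXM2, for example to ask for a given amount of GPU memory instead of a specific model.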

If you have any questions, feel free to send us a note at [email protected]