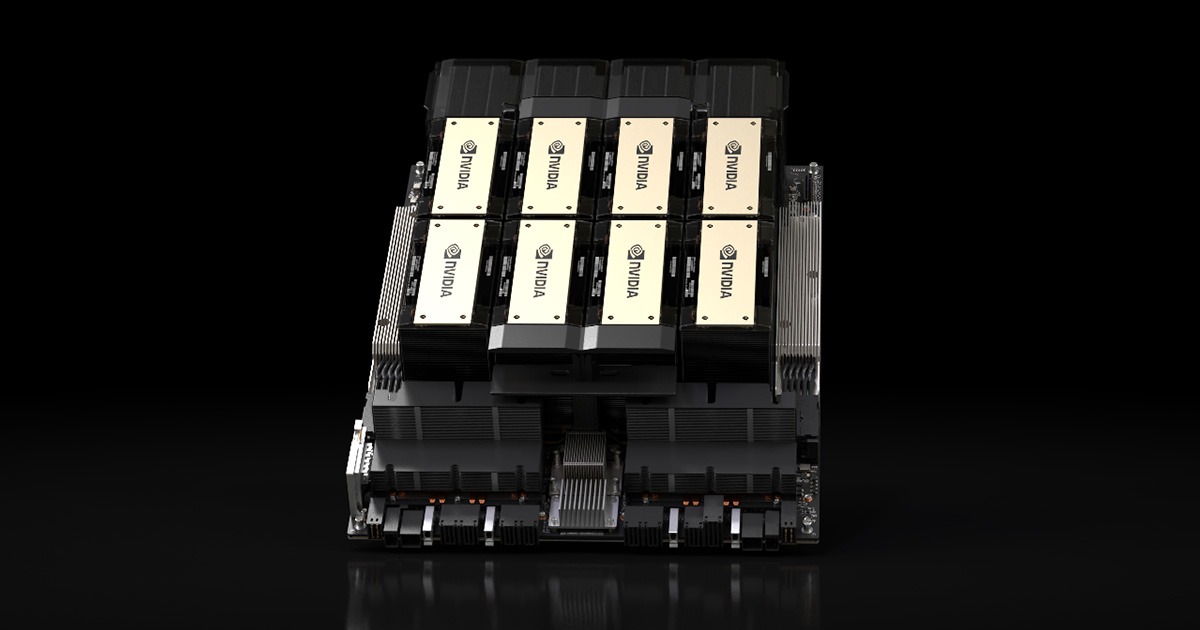

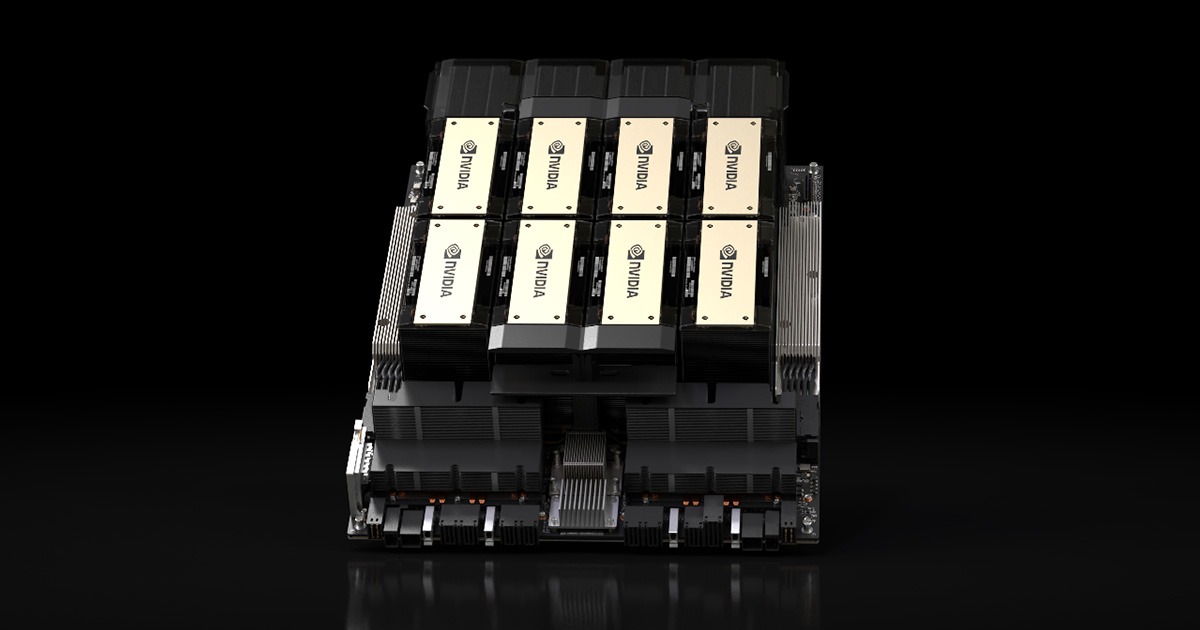

Introducing SH4_G8TF64.1, now with 8x H200 GPUs

by Kilian Cavalotti, Technical Lead & Architect, HPC

We are excited to announce the immediate availability of a powerful new node configuration to accelerate your GPU workloads on Sherlock: SH4_G8TF64.1. Featuring 8x NVIDIA H200 Tensor Core GPUs, this new configuration delivers cutting-edge
