Sherlock 4.0: a new cluster generation

August 29, 2024

We are thrilled to announce that Sherlock 4.0, the fourth generation of Stanford's High-Performance Computing cluster, is now live! This major upgrade represents a significant leap forward in our computing capabilities, offering researchers unprecedented power and efficiency to drive their groundbreaking work.

What's new in Sherlock 4.0?

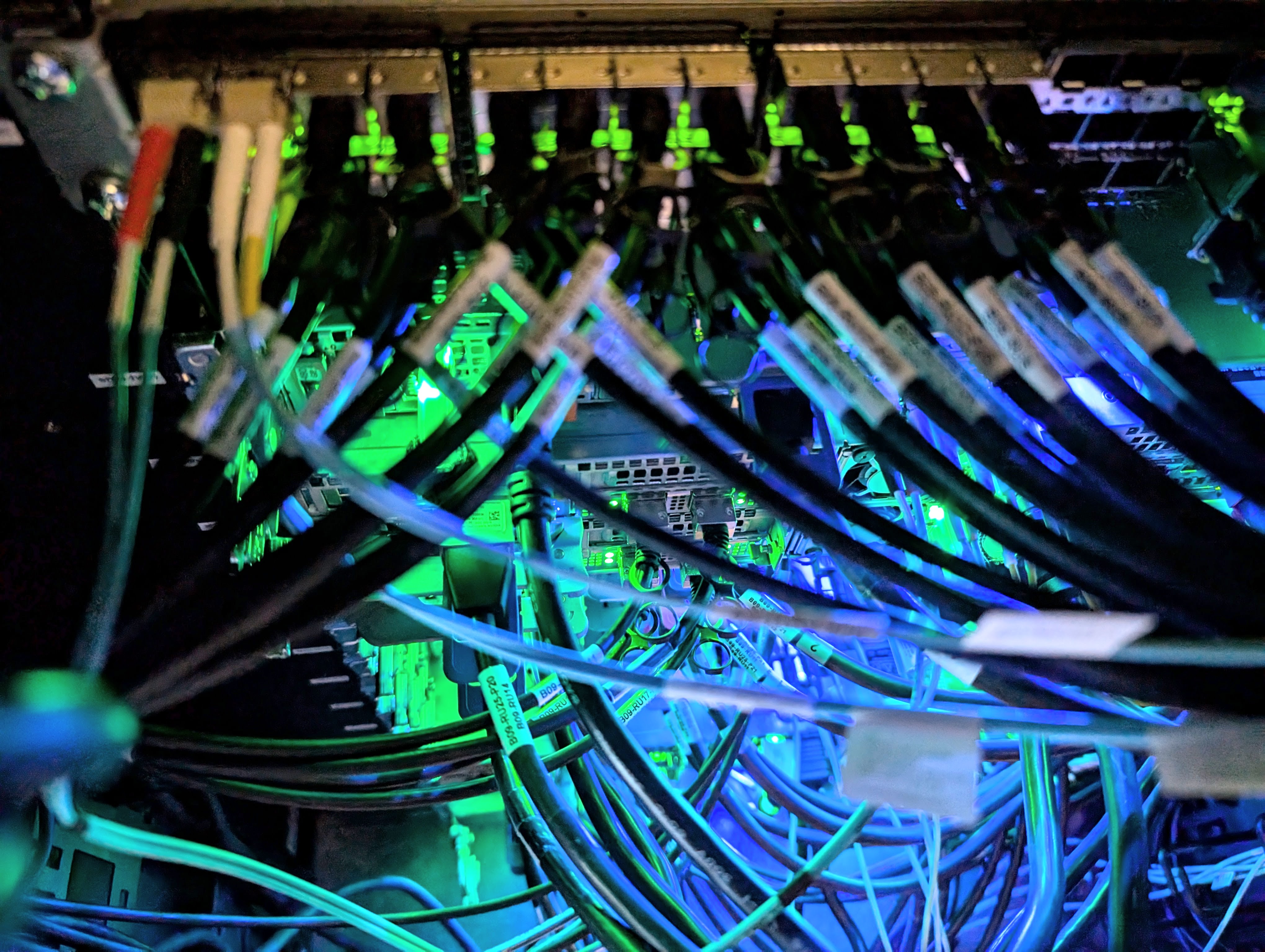

We’ve been hard at work for the last few months, designing a brand new architecture and preparing the ground for the next generation of the cluster. Sherlock 4.0 brings cutting-edge technology to support the Stanford research community's computing needs.

A new, faster interconnect | Infiniband NDR, 400Gb/s

Sherlock 4.0 introduces a groundbreaking upgrade to its interconnect infrastructure, featuring Infiniband NDR technology that delivers an impressive 400Gb/s of bandwidth. This significant enhancement provides all new nodes with increased bandwidth and reduced latency, benefiting both large-scale parallel MPI applications and access to the $SCRATCH and $OAK parallel file systems.

For the first time in Sherlock’s history, the high-speed interconnect in Sherlock 4.0 is a full fat-tree, non-blocking fabric. This enables line-speed performance across the whole cluster and ensures uniform connection performance (in terms of bandwidth and latency) between any two nodes in the fabric. Previous cluster generations used blocking fabric designs that created “performance islands”, where nodes in close proximity enjoyed higher bandwidth and lower latency than more distant nodes. With Sherlock 4.0, the non-blocking interconnect allows all compute nodes to communicate at the maximum performance level, regardless of their physical location within the cluster.
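One way to picture the difference between blocking and non-blocking designs is the oversubscription ratio $r$ of a leaf switch. It compares the bandwidth that $n_{\text{down}}$ node-facing ports of speed $B_{\text{down}}$ can inject with the bandwidth of the $n_{\text{up}}$ uplinks of speed $B_{\text{up}}$ toward the rest of the fabric. The port splits below are hypothetical, chosen only for illustration. They are not Sherlock's actual switch layout.

$$
r = \frac{n_{\text{down}} \, B_{\text{down}}}{n_{\text{up}} \, B_{\text{up}}},
\qquad
r_{\text{32 down, 32 up}} = \frac{32 \times 400}{32 \times 400} = 1,
\qquad
r_{\text{48 down, 16 up}} = \frac{48 \times 400}{16 \times 400} = 3
$$

With $r = 1$, every node can in principle send at its full 400Gb/s to any other node at the same time. With $r = 3$, nodes on the same leaf still talk to each other at 400Gb/s. However, if all 48 of them communicate with nodes on other leaves at once, they share 16 × 400Gb/s of uplink bandwidth, or about 133Gb/s each. This is the "performance island" effect described above.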

Sherlock 4.0 stands among the first HPC clusters worldwide to offer 400Gb/s connectivity to every node. In addition, high-end GPU configurations now feature up to four Infiniband connections per node, delivering an aggregated bandwidth of 1.6 Tb/s to the node.

Latest processors | AMD Genoa and Intel Sapphire Rapids CPUs

The new Sherlock generation introduces a diverse and powerful processor lineup, incorporating both AMD and Intel CPUs to deliver optimal performance, power efficiency, and cost-effectiveness. By leveraging the unique strengths of each CPU family, Sherlock 4.0 compute nodes are tailored to provide enhanced performance and specialized features where they matter most. From high-performance cores with large L3 caches, to many-core nodes boasting up to 256 cores per node, and large-memory nodes including up to 4TB of RAM, the Sherlock 4.0 node configurations cover a broad spectrum of scientific computing needs.

The inclusion of AMD's 4th-generation EPYC (Genoa, Genoa-X, Bergamo and Siena) and Intel's 4th-generation Xeon Scalable (Sapphire Rapids) processors ensures that researchers have access to the latest advancements in CPU technology. This dual-vendor approach allows for greater flexibility in matching computational resources to specific research needs, whether it's high core counts, specialized instruction sets, or optimized power consumption.

Enhanced node-local storage | 3+TB NVMe per node

Leveraging the latest generation of E3.S PCIe Gen5 NVMe drives, Sherlock 4.0 takes a significant leap forward in local storage capabilities. Each node now boasts a minimum of 3.84TB of cutting-edge local storage, with up to 30TB (4x 7.68TB) for the high-end GPU configurations. This upgrade is particularly beneficial for applications that demand high Input/Output Operations Per Second (IOPS) rates, ensuring faster data access and processing times.

State-of-the-art GPUs | NVIDIA H100 and L40S

Sherlock 4.0 introduces cutting-edge GPU capabilities with the addition of NVIDIA H100 and L40S GPUs. For intensive AI and machine learning workloads, researchers can now leverage nodes equipped with either 8 or 4 NVIDIA H100 GPUs, offering unprecedented computational power for complex model training and inference. A configuration featuring 4 NVIDIA L40S GPUs is also available, specifically optimized for CryoEM and Molecular Dynamics simulations.

More (and faster) login and DTN nodes

We’re also introducing a fleet of 8 new login nodes and 4 new data-transfer nodes (DTNs) with Sherlock 4.0, featuring upgraded 25Gbps connectivity to the Stanford network. This enhancement provides users with improved access, more robust connectivity, and significantly faster data transfer rates when interacting with external systems.

Refreshed and improved infrastructure

The full list would be too long to cover here. From additional service nodes that help the distributed cluster management infrastructure scale, to a brand new 100Gbps Ethernet backbone and an improved Ethernet topology between the racks, every aspect of Sherlock has been rethought and improved.

New catalog and node configurations

Building on the existing node classes, Sherlock 4.0 introduces the following new node configurations:

CPU nodes

| Node class | Description | Configuration |
|---|---|---|
| SH4_CBASE | Entry-level, affordable and most flexible config | 1x AMD 8224P Siena, 24 cores, 192 GB RAM, 1x 3.84 TB NVMe, 1x NDR IB |
| SH4_CPERF | High-performance cores with large L3 cache | 2x AMD 9384X Genoa-X, 64 cores, 384 GB RAM, 1x 3.84 TB NVMe, 1x NDR IB |
| SH4_CSCALE | Many cores | 2x AMD 9754 Bergamo, 256 cores, 1.5 TB RAM, 2x 3.84 TB NVMe, 1x NDR IB |
| SH4_CBIGMEM | Large memory | 2x Intel 8462Y+ Sapphire Rapids, 64 cores, 4 TB RAM, 2x 3.84 TB NVMe, 1x NDR IB |

GPU nodes

| Node class | Description | Configuration |
|---|---|---|
| SH4_G4FP32 | Entry-level GPU, FP32 focus for CryoEM/MD | 2x Intel 6426Y Sapphire Rapids, 32 cores, 256 GB RAM, 4x NVIDIA L40S GPUs, 1x 7.68 TB NVMe, 1x NDR IB |
| SH4_G4TF64 | GPU node for HPC, AI/ML | 2x Intel 8462Y+ Sapphire Rapids, 64 cores, 1 TB RAM, 4x NVIDIA H100 SXM5 GPUs, 2x 7.68 TB NVMe, 2x NDR IB |
| SH4_G8TF64 | Best-in-class GPU config for GenAI/LLM, AI/ML | 2x Intel 8462Y+ Sapphire Rapids, 64 cores, 2 TB RAM, 8x NVIDIA H100 SXM5 GPUs, 4x 7.68 TB NVMe, 4x NDR IB |

What changes in practice

The upgrade to Sherlock 4.0 maintains full compatibility with existing workflows and usage patterns. Users can continue to interact with Sherlock as they have in previous versions, with no changes required in their established routines. Nodes from the previous Sherlock 2.0 and Sherlock 3.0 generations are still up and running, and will continue to support user workloads.

Sherlock is still a single and unified cluster, with the same:

- single point of entry at login.sherlock.stanford.edu,
- single and ubiquitous data storage space, allowing users to reach their data on all file systems from any node in the cluster,
- single application stack, enabling the loading of identical modules and execution of the same software across all Sherlock nodes.

It all stays the same, but Sherlock now features a new island, with a new family of compute nodes. Thanks to the generous sponsorship of the Stanford Provost, we’ve been able to add the following resources to Sherlock’s public partitions:

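| partition | nodes |
|---|---|
| normal | 16x SH4_CBASE |
| normal | 2x SH4_CPERF |
| normal | 1x SH4_CSCALE |
| bigmem | 1x SH4_CBIGMEM |
| gpu | 4x SH4_G4FP32 |
| gpu | 2x SH4_G4TF64 |
| gpu | 1x SH4_G8TF64 |
| Total | 27 nodes, 1,152 cores, 15TB RAM, 32 GPUs |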
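The totals above can be cross-checked against the catalog. This check assumes that the seven rows map, in order, to the SH4_CBASE, SH4_CPERF, SH4_CSCALE, SH4_CBIGMEM, SH4_G4FP32, SH4_G4TF64 and SH4_G8TF64 configurations, and it writes memory in GB (1.5 TB = 1,536 GB, 1 TB = 1,024 GB, and so on). Under that mapping, the node, core and GPU counts match exactly.

$$
\begin{aligned}
\text{nodes} &= 16 + 2 + 1 + 1 + 4 + 2 + 1 = 27 \\
\text{cores} &= 16 \times 24 + 2 \times 64 + 256 + 64 + 4 \times 32 + 2 \times 64 + 64 = 1{,}152 \\
\text{GPUs} &= 4 \times 4 + 2 \times 4 + 1 \times 8 = 32 \\
\text{RAM} &= 16 \times 192 + 2 \times 384 + 1{,}536 + 4{,}096 + 4 \times 256 + 2 \times 1{,}024 + 2{,}048 = 14{,}592\ \text{GB}
\end{aligned}
$$

The 14,592 GB of RAM is what the table rounds to 15TB.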

Accessing the new nodes

Those 27 new Sherlock 4.0 nodes are already available in the regular public partitions (normal, gpu and bigmem) and have started running jobs. All the new nodes also have specific node features defined, which allow the scheduler to select them when jobs request particular constraints. The full list of available features can be obtained via the sh_node_feat command.
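The same constraints can also be used in batch scripts. Below is a minimal sketch of a job that targets the new H100 nodes. It assumes that the feature names `CLASS:SH4_G4TF64` and `CLASS:SH4_G8TF64` follow the same `CLASS:<node class>` pattern as the interactive example below. Those names, the job name, and the resource amounts are illustrative assumptions, so check the actual feature names with `sh_node_feat` first. The `|` in the constraint is standard Slurm syntax for "any of these features".

```shell
#!/bin/bash
# Minimal sketch of a batch job aimed at the Sherlock 4.0 H100 nodes.
# ASSUMPTION: CLASS:SH4_G4TF64 / CLASS:SH4_G8TF64 are hypothetical feature names
# modeled on CLASS:SH4_CBASE; confirm the real ones with sh_node_feat.
# sbatch reads the #SBATCH lines; bash itself treats them as comments.
# The "|" in --constraint lets Slurm pick a node with either feature.
#SBATCH --job-name=sh4-h100-test
#SBATCH --partition=gpu
#SBATCH --constraint=CLASS:SH4_G4TF64|CLASS:SH4_G8TF64
#SBATCH --gpus=1
#SBATCH --cpus-per-task=8
#SBATCH --time=02:00:00

# Report where the job landed. Slurm sets SLURM_JOB_ID and SLURM_JOB_NODELIST;
# the fallbacks keep the script runnable outside of a Slurm job.
msg="Job ${SLURM_JOB_ID:-unknown} running on ${SLURM_JOB_NODELIST:-${HOSTNAME:-unknown host}}"
echo "$msg"
```

Saved under a name of your choosing (for example, the hypothetical `sh4_h100_job.sh`), it would be submitted with `sbatch sh4_h100_job.sh`.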

For instance, users could request an interactive session with 8 CPU cores for 2 hours on an SH4_CBASE node with:

```
$ salloc -C CLASS:SH4_CBASE -c 8 -t 2:00:00
```

Requests for orders are now OPEN!

We're also very excited to announce that starting today, Principal Investigators, Departments, and Schools can place requests for their own dedicated Sherlock 4.0 nodes!

The ordering process has not changed: to place an order request or learn more about available configurations and pricing, visit the Sherlock Catalog at http://www.sherlock.stanford.edu/catalog, and fill out the Order request form at http://www.sherlock.stanford.edu/order.

More complete details about the purchasing process are available on the Sherlock website.

Order size limits

Please note that each node type is available in finite quantities. For substantial purchase requests (individual orders of more than 4 nodes, or Institute/School-level orders), please feel free to reach out so we can evaluate the feasibility of those requests. Our priority is to ensure a fair and equitable purchase process, and to balance the needs of individual research groups with the overall demand across the Stanford research community.

What's Next?

Sherlock 4.0 is more than just an upgrade — it's a new chapter in our research computing landscape. We can't wait to see the groundbreaking discoveries and innovations that will emerge from this new era of high-performance computing at Stanford.

We want to sincerely thank every one of you for your patience while we were working on bringing up this new cluster generation. We know it’s been a very long wait, but we hope it will have been worthwhile. And as always, please feel free to reach out with any questions or concerns.

Happy computing, and here's to pushing the boundaries of research with Sherlock 4.0!

P.S. Don't forget to join us on Slack for updates and community discussions about Sherlock!
