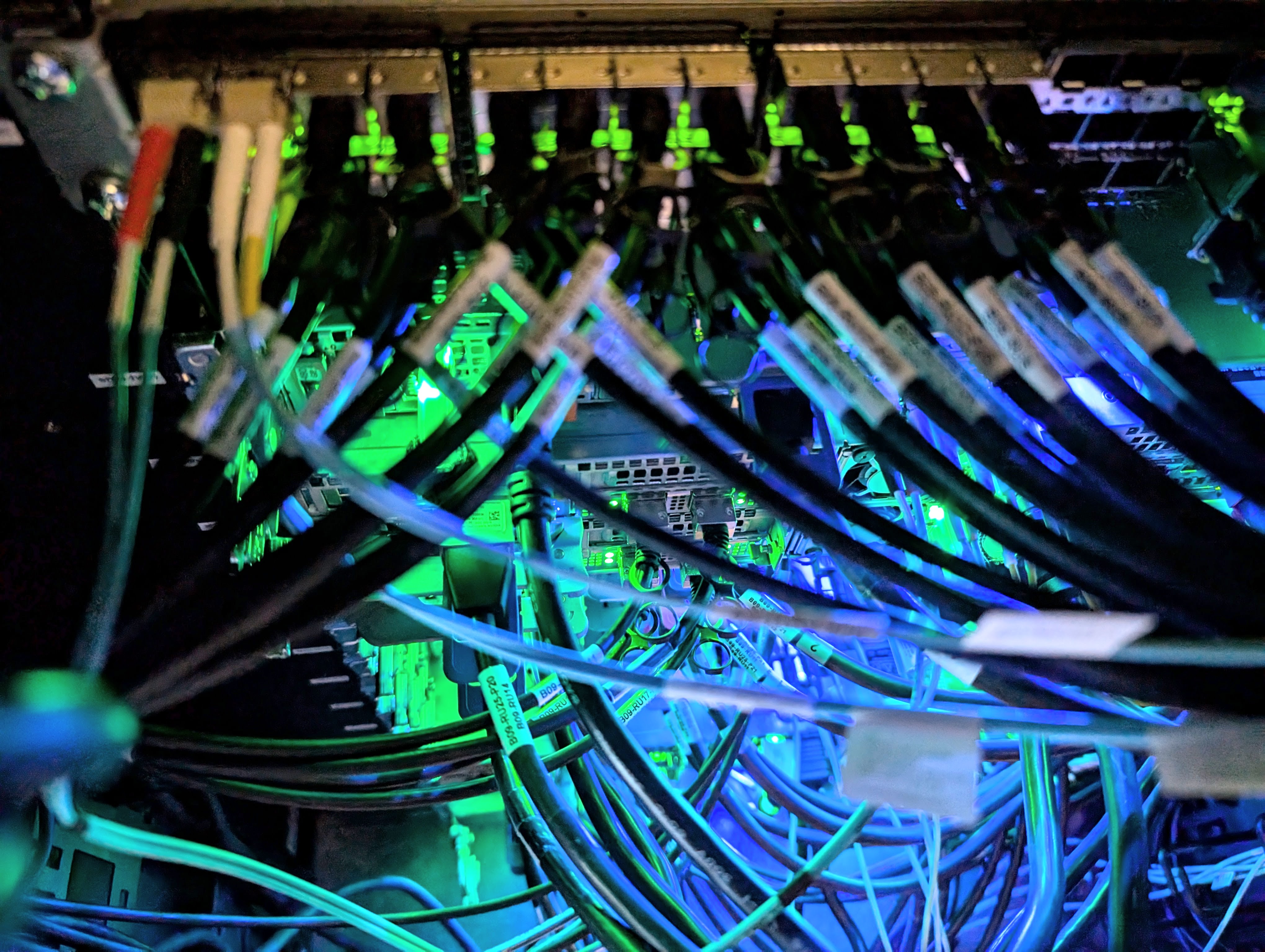

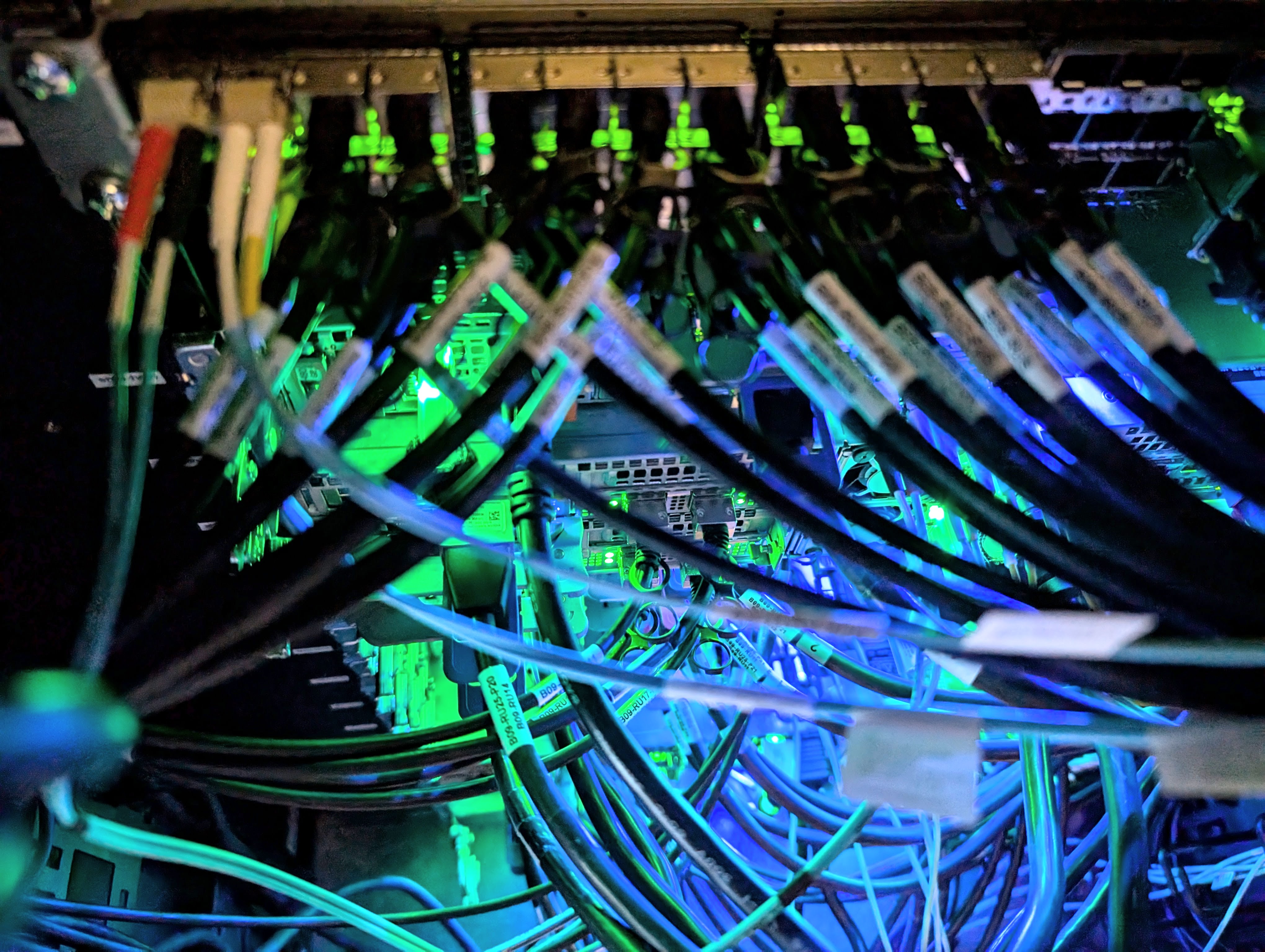

Sherlock 4.0: a new cluster generation

by Kilian Cavalotti, Technical Lead & Architect, HPC

We are thrilled to announce that Sherlock 4.0, the fourth generation of Stanford's High-Performance Computing cluster, is now live! This major upgrade represents a significant leap forward in our computing capabilities, offering researchers
