Sherlock will not be available for login, to submit jobs or to access files from Saturday June 24th, 2023 at 00:00 PST to Monday July 3rd, 2023 at 18:00 PST.

Jobs will stop running and access to login nodes will be closed at 00:00 PST on Saturday, June 24th, to allow sufficient time for shutdown and pre-downtime maintenance tasks on the cluster, before the power actually goes out. If everything goes according to plan, and barring issues or delays with power availability, access will be restored on Monday, July 3rd at 18:00 PST.

We will use this opportunity to perform necessary maintenance operations on Sherlock that can’t be done while jobs are running, which will avoid having to schedule a whole separate downtime. Sherlock will go offline in advance of the actual electrical shutdown to ensure that all equipment is properly powered off and minimize the risks of disruption and failures when power is restored.

A reservation will be set in the scheduler for the duration of the downtime: if you submit a job on Sherlock and the time you request exceeds the time remaining until the start of the downtime, your job will be queued until the maintenance is over, and the squeue command will report a status of ReqNodeNotAvailable (“Required Node Not Available”).
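The rule above can be checked before submitting. The following is a minimal sketch, not the scheduler's actual logic: the function name `fits_before_downtime` is hypothetical, times are naive local cluster times, and it assumes the job would start running the moment it is submitted (in practice, queue wait time shortens the usable window).

```python
from datetime import datetime, timedelta

# Start of the downtime as announced above (naive local cluster time).
DOWNTIME_START = datetime(2023, 6, 24, 0, 0)


def fits_before_downtime(start_time: datetime, time_limit: timedelta) -> bool:
    """Return True if a job starting at start_time with the requested
    time limit would end no later than the start of the downtime."""
    return start_time + time_limit <= DOWNTIME_START


# Hypothetical example: a job that starts at noon on Friday, June 23rd.
noon_friday = datetime(2023, 6, 23, 12, 0)
print(fits_before_downtime(noon_friday, timedelta(hours=8)))   # ends at 20:00 Friday
print(fits_before_downtime(noon_friday, timedelta(hours=24)))  # would run into the downtime
```

A job for which this check fails is the kind that would stay queued until the maintenance is over, so requesting a shorter time limit is the way to get it running beforehand.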

The hours leading up to a downtime are an excellent time to submit shorter, smaller jobs that can complete before the maintenance begins: as the queues drain there will be many nodes available, and your wait time may be shorter than usual.

As previously mentioned, in anticipation of this week-long downtime, we encourage all users to plan their work accordingly, and ensure that they have contingency plans in place for their computing and data accessibility needs during that time. If you have important data that you need to be able to access while Sherlock is down, we strongly recommend that you start transferring your data to off-site storage systems ahead of time, to avoid last-minute complications. Similarly, if you have deadlines around the time of the shutdown that require computation results, make sure to anticipate those and submit your jobs to the scheduler as early as possible.

We understand that this shutdown will have a significant impact on users who rely on Sherlock for their computing and data processing needs, and we appreciate your cooperation and understanding as we work to improve our Research Computing infrastructure.

For help transferring data, or if you have any questions or concerns, please do not hesitate to reach out to [email protected].

The normal partition on Sherlock is getting an upgrade!

With the addition of 24 brand new SH3_CBASE.1 compute nodes, each featuring one AMD EPYC 7543 Milan 32-core CPU and 256 GB of RAM, Sherlock users now have 768 more CPU cores at their disposal. Those new nodes will complement the existing 154 compute nodes and 4,032 cores in that partition, for a new total of 178 nodes and 4,800 CPU cores.
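As a quick sanity check, the totals follow directly from the figures quoted above. This small sketch only restates that arithmetic; the variable names are illustrative.

```python
# Figures taken from the announcement above.
new_nodes = 24
cores_per_new_node = 32   # one 32-core AMD EPYC 7543 per node
existing_nodes = 154
existing_cores = 4032

added_cores = new_nodes * cores_per_new_node
total_nodes = existing_nodes + new_nodes
total_cores = existing_cores + added_cores

print(added_cores, total_nodes, total_cores)  # 768 178 4800
```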

The normal partition is Sherlock’s shared pool of compute nodes, which is available free of charge to all Stanford Faculty members and their research teams, to support their wide range of computing needs. In addition to this free set of computing resources, Faculty can supplement these shared nodes by purchasing additional compute nodes, and become Sherlock owners. By investing in the cluster, PI groups not only receive exclusive access to the nodes they purchased, but also get access to all of the other owner compute nodes when they're not in use, thus giving them access to the whole breadth of Sherlock resources, currently over 1,500 compute nodes, 46,000 CPU cores and close to 4 PFLOPS of computing power.

We hope that this new expansion of the normal partition, made possible thanks to additional funding provided by the University Budget Group as part of the FY23 budget cycle, will help support the ever-increasing computing needs of the Stanford research community, and enable even more breakthroughs and discoveries.

As usual, if you have any questions or comments, please don’t hesitate to reach out at [email protected].

So today, we’re introducing a new Sherlock catalog refresh, a Sherlock 3.5 of sorts.

The new catalog

So, what changes? What stays the same?

In a nutshell, you’ll continue to be able to purchase the existing node types that you’re already familiar with:

CPU configurations:

CBASE: base configuration ($)

CPERF: high core-count configuration ($$)

CBIGMEM: large-memory configuration ($$$$)

GPU configurations:

G4FP32: base GPU configuration ($$)

G4TF64: HPC GPU configuration ($$$)

G8TF64: best-in-class GPU configuration ($$$$)

But they now come with better and faster components!

To avoid confusion, the configuration names in the catalog will be suffixed with an index to indicate the generational refresh, but will keep the same base name. For instance, the previous SH3_CBASE configuration is now replaced with a SH3_CBASE.1 configuration that still offers 32 CPU cores and 256 GB of RAM.

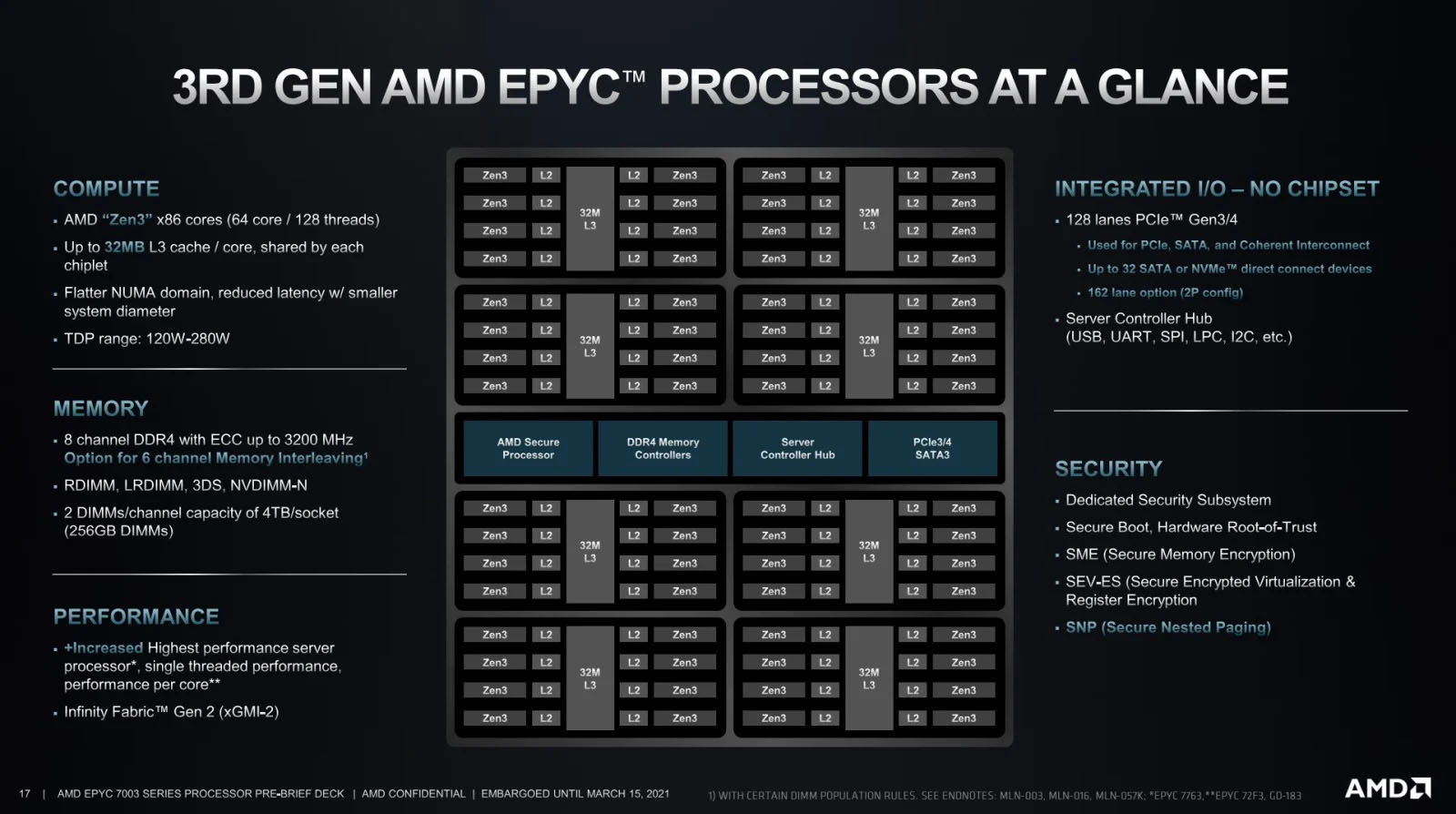

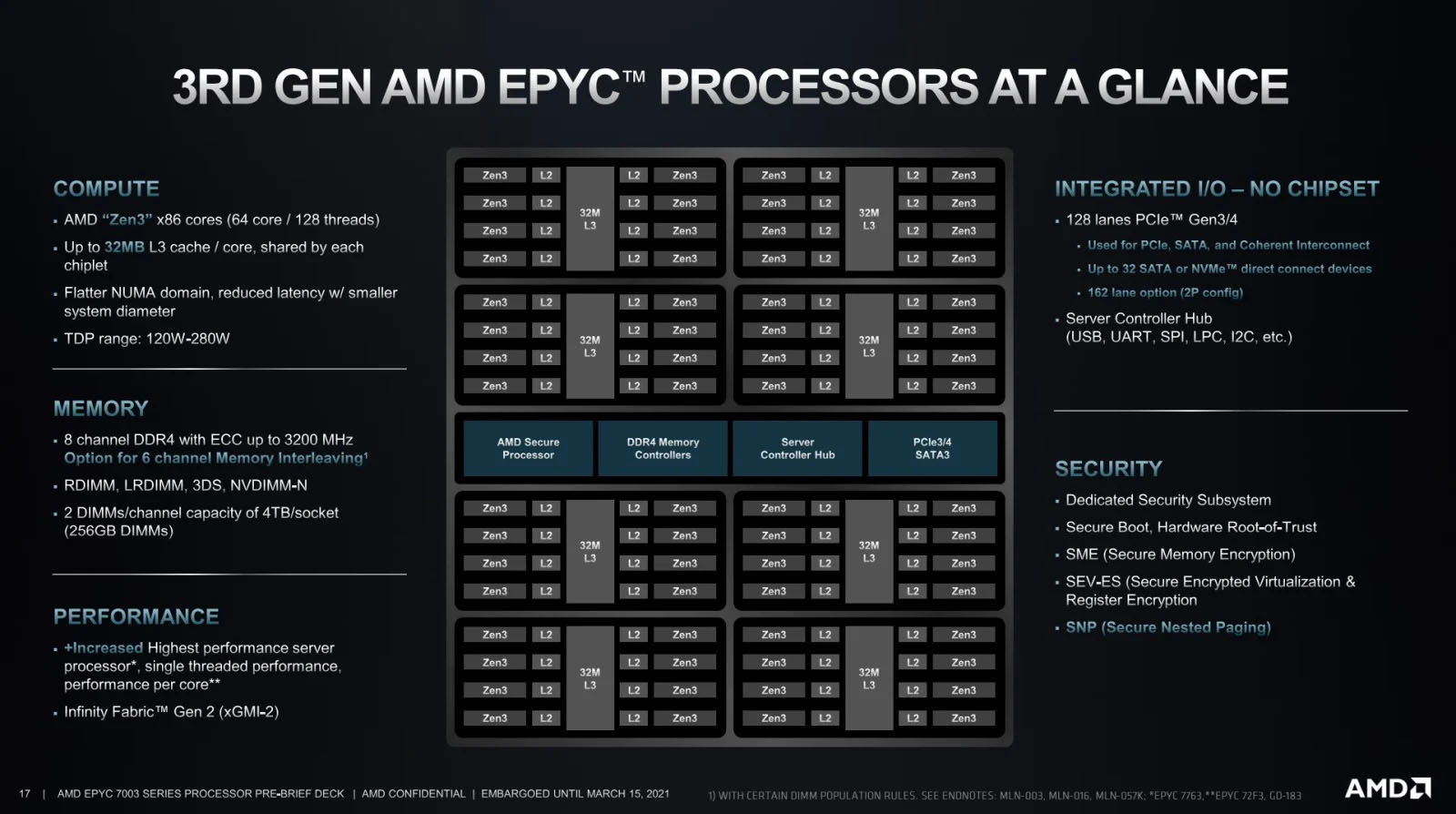

A new CPU generation

The main change in the existing configurations is the introduction of the new AMD 3rd Gen EPYC Milan CPUs. In addition to the advantages of the previous Rome CPUs, this new generation brings:

a new micro-architecture (Zen3)

a ~20% performance increase in instructions completed per clock cycle (IPC)

enhanced memory performance, with a unified 32 MB L3 cache

improved CPU clock speeds

More specifically, for Sherlock, the following CPU models are now used:

| Model | Sherlock 3.0 (Rome) | Sherlock 3.5 (Milan) |
|---|---|---|
| CBASE | 1× 7502 (32-core, 2.50GHz) | 1× 7543 (32-core, 2.75GHz) |
| CPERF | 2× 7742 (64-core, 2.25GHz) | 2× 7763 (64-core, 2.45GHz) |
| CBIGMEM | 2× 7502 (32-core, 2.50GHz) | 2× 7543 (32-core, 2.75GHz) |
| G4FP32 | 1× 7502 (32-core, 2.50GHz) | 1× 7543 (32-core, 2.75GHz) |
| G4TF64 | 2× 7502 (32-core, 2.50GHz) | 2× 7543 (32-core, 2.75GHz) |
| G8TF64 | 2× 7742 (64-core, 2.25GHz) | 2× 7763 (64-core, 2.45GHz) |

In addition to IPC and L3 cache improvements, the new CPUs also bring a frequency boost that will provide a substantial performance improvement.
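To put a number on the frequency boost, the relative base-clock increase can be computed from the figures in the table above. This is a rough sketch: it only compares the listed base clocks, and real application speedups will also depend on IPC, cache behavior and boost frequencies, which are not modeled here. The helper name `clock_gain_percent` is illustrative.

```python
# Base clocks in GHz, copied from the table above: (Rome, Milan).
base_clocks = {
    "7502 -> 7543": (2.50, 2.75),
    "7742 -> 7763": (2.25, 2.45),
}


def clock_gain_percent(old_ghz: float, new_ghz: float) -> float:
    """Relative base-clock increase from old_ghz to new_ghz, in percent."""
    return (new_ghz - old_ghz) / old_ghz * 100.0


for name, (old, new) in base_clocks.items():
    print(f"{name}: +{clock_gain_percent(old, new):.1f}% base clock")
```

By this measure, the 32-core parts gain about 10% in base clock and the 64-core parts about 9%, on top of the IPC improvement mentioned earlier.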

New GPU options

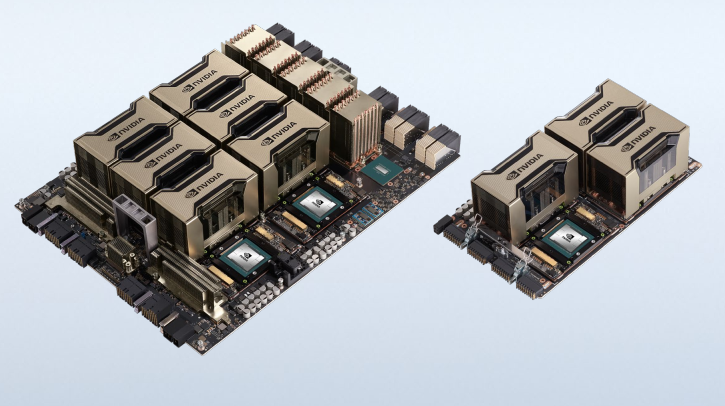

On the GPU front, the two main changes are the re-introduction of the G4FP32 model, and the doubling of GPU memory all across the board.

GPU memory is quickly becoming the constraining factor for training deep-learning models that keep increasing in size. Having large amounts of GPU memory is now key for running medical imaging workflows, computer vision models, or anything that requires processing large images.

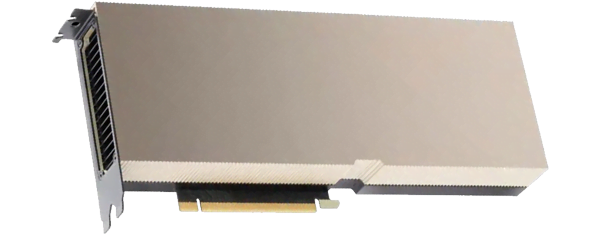

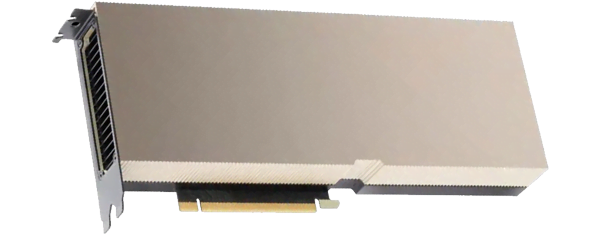

The entry-level G4FP32 model is back in the catalog, with a new NVIDIA A40 GPU in an updated SH3_G4FP32.2 configuration. The A40 GPU not only provides higher performance than the model it replaces, but also comes with twice as much GPU memory, with a whopping 48GB of GDDR6.

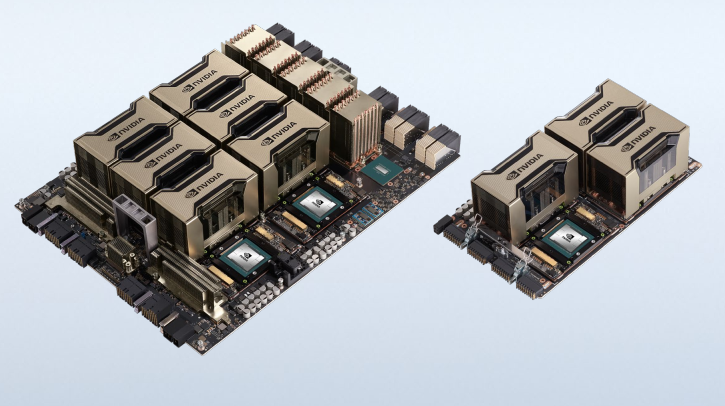

The higher-end G4TF64 and G8TF64 models have also been updated with newer AMD CPUs, as well as updated versions of the NVIDIA A100 GPU, now each featuring a massive 80GB of HBM2e memory.

Get yours today!

For more details and pricing, please check out the Sherlock catalog (SUNet ID required).

If you’re interested in getting your own compute nodes on Sherlock, all the new configurations are available for purchase today, and can be ordered online through the Sherlock order form (SUNet ID required).

As usual, please don’t hesitate to reach out if you have any questions!
